That means email is the preferred method. However, it’s an incredibly noisy environment. Think: a Speed Metal concert filled with screaming babies.

So how does your B2B marketing email cut through the clutter and stay out of the spam zone? There’s a lot of internet advice on how to create the best darn email (we might have similar Email Marketing advice too!).

But a lot of these websites fail to teach you one important thing: you must test your emails, and test them well.

Why Should I Test My Emails? They Work Fine!

What if you could improve your email’s open and click-through rates with just a little tweak?

That’s exactly what Deckers saw after testing a mobile responsive version of their emails:

"The results of these A/B tests definitely leaned in favor of using responsive design. The emails that used media queries saw a:

- 10% increase in click-through rates

- 9% increase in mobile opens (iPhone opens went from 15% to 18%)"

Their click-through rates increased by 10%. That’s 10% more visitors to their website, blog post, or product page. That’s 10% more chances to turn visitors into buyers.

A chance worth taking, no?

In addition, emails break with surprising regularity. Your beautifully crafted email in HTML can look remarkably different across email clients. As we know, ugly emails turn off visitors.

So, to answer the question: “Is it a waste of time to test emails?”

Yes...only if you don’t want to improve, and your visitors don’t matter.


Let’s Test Our Email Marketing. How Do I Get Started?

The answer: What, How, and Check.

- What to test in your email

An email is made up of many elements, like the subject line, images, and copy. While we’d love to test every element, that’s not always feasible with deadlines looming. That’s why we focus on testing the most important things first, one at a time.

- How to test your email’s effectiveness

A/B tests measure the effect of email variations. It’s a lot like conducting a science experiment where you take two emails, tweak one, send both, and monitor results.

- Check for email integrity

Emails can break. Here’s where we check and make sure they don’t break across different email clients.

For the rest of this blog post, I’ll go through each component in detail.

1. The What: Pick One Variable to Test

This list of 18 things to test (compiled from Marketo and Kissmetrics) is our go-to list for email testing. I’ve bolded five of the easiest things to test first.

- Subject line

- Email layout

- Email headline

- Body text

- Offer specificity

- Call-to-action (number, placement)

- Closing text

- Images

- Mostly-images vs. mostly-text

- Font (styles, colour)

- Copy length

- Copy tone (human vs. corporate)

- Sending (Day of the week, time of day, frequency)

- From name

- First name personalisation (subject line or email body)

- Links vs. buttons

- Number of links

- Social icons

If you’re new to testing, I suggest you start with subject lines and email headlines. It’s fairly easy to generate variants and get the tests running quickly.

Once you’ve decided on your test variable, it’s time to move on to the tests.

2. The How: A/B Test Email Variations

The A/B Test—also known as split testing—is like a science experiment.

At its core, the test sends at least two email versions to randomly selected people to determine which does better. Like all good science experiments, we have variables and controls. We change only one element in the email (e.g. a button, an image, the copy) and measure two important metrics: open rate and click-through rate.

For example, I’m putting out a company newsletter. Not many people have opened the previous newsletters. I want to see if a chirpy subject line gets more opens (go back up to “The What: Pick One Variable to Test” to see why I picked this variable).

To conduct the A/B test, I need two versions:

- Version 1: The original newsletter with the usual subject line

“Constructing News: Visions, AI, computers and more”

- Version 2: The same newsletter in every way, except for a different subject line

“Are you an AI? And more news this week”

I take a subset of my email list—about 100 randomly selected folks. The randomly selected part is important to ensure that I don’t select people who might respond in a particular way. Then I split the list down the middle to get List A and List B.
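The sampling and splitting step can be sketched in a few lines of Python. This is a minimal illustration, not how any particular email service does it. The function name `split_for_ab_test`, the `seed` parameter, and the example addresses are all made up for the sketch.

```python
import random


def split_for_ab_test(subscribers, sample_size=100, seed=None):
    """Pick a random subset of the list and split it into two halves.

    Hypothetical helper for illustration; `seed` only makes the draw repeatable.
    """
    rng = random.Random(seed)
    pool = list(subscribers)
    # Never ask for more people than the list contains.
    sample_size = min(sample_size, len(pool))
    # random.sample draws without replacement and returns the picks in random
    # order, so cutting the result down the middle gives two random groups.
    sample = rng.sample(pool, sample_size)
    half = sample_size // 2
    return sample[:half], sample[half:]


# Example with made-up addresses.
subscribers = [f"reader{i}@example.com" for i in range(1, 1001)]
list_a, list_b = split_for_ab_test(subscribers, sample_size=100, seed=7)
print(len(list_a), len(list_b))  # 50 50
```

Because the draw is random, nobody ends up in List A or List B for a reason that could also affect whether they open the email.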

I send the Version 1 newsletter to List A and the Version 2 newsletter to List B via an email service like MailChimp or HubSpot, which tracks email opens, click-throughs, and other stats.

Once done, I wait…and wait…and wait for the results. It takes about four to six hours to get some data.

Suppose Version 2 beats Version 1 in open and click-through rates. I know that it’s due to the new chirpy subject line, since that’s the only thing I changed. Hence, I conclude that newsletters with chirpy subject lines work better, and I proceed to the next test.
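Reading the results boils down to comparing two rates per version. The sketch below assumes that open rate and click-through rate are both measured against delivered emails (some tools divide clicks by opens instead). The names `VariantResult` and `pick_winner` are hypothetical, and every number is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Raw counts for one email version (illustrative structure)."""
    name: str
    delivered: int
    opens: int
    clicks: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered

    @property
    def click_through_rate(self) -> float:
        # Assumption: clicks divided by delivered emails, not by opens.
        return self.clicks / self.delivered


def pick_winner(a, b):
    """Return the variant that wins on both metrics, or None if the result is mixed."""
    if a.open_rate > b.open_rate and a.click_through_rate > b.click_through_rate:
        return a
    if b.open_rate > a.open_rate and b.click_through_rate > a.click_through_rate:
        return b
    return None


# Invented counts for the two newsletter versions.
version_1 = VariantResult("Version 1", delivered=50, opens=10, clicks=2)
version_2 = VariantResult("Version 2", delivered=50, opens=16, clicks=5)

winner = pick_winner(version_1, version_2)
print(winner.name if winner else "No clear winner")  # Version 2
```

With only 50 people per list, a gap of a few opens can come down to chance, so a result like this is a hint worth re-testing rather than proof.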

Halt! Before sending out the variants, I need to check their integrity.

3. The Check: Email Integrity Across Clients

Strictly speaking, this step isn’t a test for effectiveness. It’s quality assurance for emails.

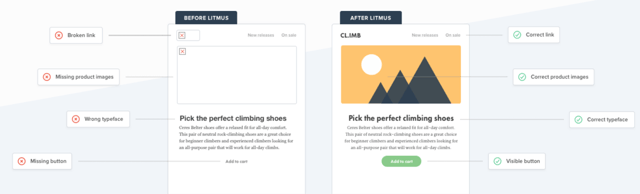

As mentioned in the beginning, emails break with astonishing regularity across different email clients—from Outlook to Gmail to iOS Mail. But you don’t have to send an email to all the different clients. There’s an easy way to do this: Litmus.

It’s an email checking tool that shows how an email looks on a mobile screen, on a desktop screen, and within different email clients. This makes checking for broken emails far simpler. And it prevents sending out ugly emails.

Email sanity check courtesy of Litmus

So, you decided on one thing to test. You made your email variants and checked for broken bits. Just press “send” and you’ve conducted your first test.

Well done! Before we go…

Final Words: Testing Should Be in Your Marketing DNA

Email is a B2B marketer’s best friend. It’s ubiquitous, everyone expects promos via email, and emails (even B2B emails) are ridiculously easy to create.

Still, the magic that makes your email better than another marketer’s email isn’t just creativity or great copy or great offers. The magic lies in relentlessly testing your emails to get that uptick in open & click-through rates from your email list. After all, there are many tools, templates, and ideas that make our marketing lives so much easier. That’s why we should keep testing and optimising our emails—be it for B2B or B2C clients or even for ourselves.

As a final note, we’ve developed our “What, How, and Check” method over years of creating and blasting emails. I think it’s a tad cumbersome. So, I’d love it if you could share email tests and hacks that worked well for you.

Until then, test well and optimise better!

Image sources and credits:

Header image: ra2studio @ DepositPhotos

Body image: AnSim @ DepositPhotos

Screenshot from Litmus